The Virtual Sound Server, or VSS, is a powerful sound synthesis tool. It was developed in 1993 by the Audio Group at NCSA for use in the CAVE, a complex virtual reality environment used for research at several universities, including the University of Illinois at Urbana-Champaign. Unfortunately, when UIUC began building the CUBE, a more advanced virtual reality environment than the CAVE, VSS was not ported over. However, VSS, even ten years later, remains a very useful tool for creating sound in C and C++ applications such as those used in the CAVE and the CUBE. This project was therefore started to bring VSS back into the foreground for the development of applications in the Math 198 Hypergraphics class.

There are two main sides to VSS: the client side and the server side. The client side consists of one or more programs that send messages to the server. The server processes those messages and does the actual synthesis of sound. For the purposes of application development, the server is simply started on a host computer and left untouched, while the user runs a client side application from another computer connected to the host.

To connect and send messages to the server, a client must use an aud file, a special text file that dictates what messages are sent to the server. The aud file is written in a special syntax so that it can interface with C and C++ programs. It is not unlike assembly language in that it requires a certain degree of low level programming to make a versatile sonified client side application. For examples of aud files and more help on learning how to create them, see References.

Within the structure of VSS, there is a hierarchy of objects needed to create sound. At the very top are objects called "Actors". Each actor is the head of a family of sounds. At the actor level, one can make new sound objects (as children of the original actor) called "handlers". Through the actor itself, one can control certain properties of all of its children at once, such as amplitude, gain, center frequency, etc. A user can also control these properties individually through each handler.
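To make the actor and handler relationship concrete, here is a minimal C++ sketch. It does not use the VSS library at all; `Actor`, `Handler`, `beginSound`, `setAllAmplitude` and `setAmplitude` are hypothetical names I made up purely to model the idea that a property can be set either for every child of an actor or for a single handler.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a VSS sound object ("handler"); not the VSS API.
struct Handler {
    double amplitude = 1.0;
};

// Hypothetical stand-in for a VSS actor that owns a family of handlers.
class Actor {
public:
    // Create a new child handler and return its index within this actor.
    std::size_t beginSound() {
        children_.push_back(Handler{});
        return children_.size() - 1;
    }

    // Actor-level control: the change applies to every child.
    void setAllAmplitude(double amp) {
        for (Handler& h : children_) h.amplitude = amp;
    }

    // Handler-level control: the change applies to one child only.
    void setAmplitude(std::size_t child, double amp) {
        assert(child < children_.size());
        children_[child].amplitude = amp;
    }

    double amplitude(std::size_t child) const {
        assert(child < children_.size());
        return children_[child].amplitude;
    }

private:
    std::vector<Handler> children_;
};
```

In this model, calling `setAllAmplitude(0.5)` on an actor with two children sets both to 0.5, and a later `setAmplitude(1, 0.25)` changes only the second child.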

Actors are also meant to send messages to other actors and handlers. For example, a FilterActor takes as input the data sent out by a sound producing handler and creates a filtered version of that sound. Actors like the FilterActor are called "Processor Actors", while actors like the FluteActor and ClarinetActor are called "Generator Actors". There are also "Control Actors", which do not necessarily produce sound but instead facilitate the sending of messages between other actors. One example is the LaterActor, which takes a message and delays it by an amount of time set by the user. For a complete listing plus documentation of all actors, see References.
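The delaying behavior described for the LaterActor can be pictured with a small C++ sketch. This is only a model of the idea, written without VSS; the class `DelayQueue`, its methods, and the message strings used with it are hypothetical and are not taken from the VSS source or documentation.

```cpp
#include <cassert>
#include <queue>
#include <string>
#include <vector>

// A message waiting to be delivered at time `due` (in seconds).
struct Pending {
    double due;
    std::string message;
};

// Orders the priority queue so the earliest due time is on top.
struct LaterDue {
    bool operator()(const Pending& a, const Pending& b) const {
        return a.due > b.due;
    }
};

// Hypothetical model of a control actor that delays messages.
class DelayQueue {
public:
    // Schedule `msg` to be released `delay` seconds after `now`.
    void addMessage(double now, double delay, const std::string& msg) {
        assert(delay >= 0.0);
        q_.push(Pending{now + delay, msg});
    }

    // Return every message whose due time has been reached, earliest first.
    std::vector<std::string> advanceTo(double now) {
        std::vector<std::string> out;
        while (!q_.empty() && q_.top().due <= now) {
            out.push_back(q_.top().message);
            q_.pop();
        }
        return out;
    }

private:
    std::priority_queue<Pending, std::vector<Pending>, LaterDue> q_;
};
```

A message added with a one second delay is not returned by `advanceTo(0.5)` but is returned by `advanceTo(1.0)`, which is the essence of what a delaying control actor does for the messages passed through it.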

Within the Control Actors, there is a very special and important actor called the MessageGroup. The MessageGroup is analogous to a subroutine in assembly or a function in C, C++, or Python. The developer of a sound application can add messages to a MessageGroup to make certain actors perform certain tasks when the MessageGroup is sent to the VSS server. The AddMessage command also has a special syntax that lets the aud file interface with a C++ program: any numeric parameter in an AddMessage command can be written as a "*" followed by an array index into the data vector passed to the aud file through the C/C++ command AUDUpdate(...). It is solely in this way that a developer sonifies a C/C++ application, and it is a very powerful way indeed.
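The "*" indexing can be illustrated with a short C++ sketch of the substitution step. This is my own model of what the text above describes, not the code VSS uses: the function `substituteParams` and the message templates passed to it are hypothetical, and the sketch assumes that parameters are separated by whitespace.

```cpp
#include <cctype>
#include <cstddef>
#include <sstream>
#include <stdexcept>
#include <string>
#include <vector>

// Replace every whitespace-separated token of the form "*k" in `tmpl`
// with data[k]; all other tokens are copied through unchanged.
// Throws std::out_of_range if k is past the end of `data`.
std::string substituteParams(const std::string& tmpl,
                             const std::vector<float>& data) {
    std::istringstream in(tmpl);
    std::ostringstream out;
    std::string token;
    bool first = true;
    while (in >> token) {
        if (!first) out << ' ';
        first = false;

        // A token is an index reference if it is '*' followed only by digits.
        bool isIndex = token.size() > 1 && token[0] == '*';
        for (std::size_t i = 1; isIndex && i < token.size(); ++i) {
            isIndex = std::isdigit(static_cast<unsigned char>(token[i])) != 0;
        }

        if (isIndex) {
            std::size_t k = std::stoul(token.substr(1));
            if (k >= data.size()) {
                throw std::out_of_range("index past end of data array");
            }
            out << data[k];
        } else {
            out << token;
        }
    }
    return out.str();
}
```

Under these assumptions, a stored template containing `*0` and `*1` is turned into a concrete message each time the application supplies a new data array, which is how one aud file can be driven by changing values in the C/C++ program.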

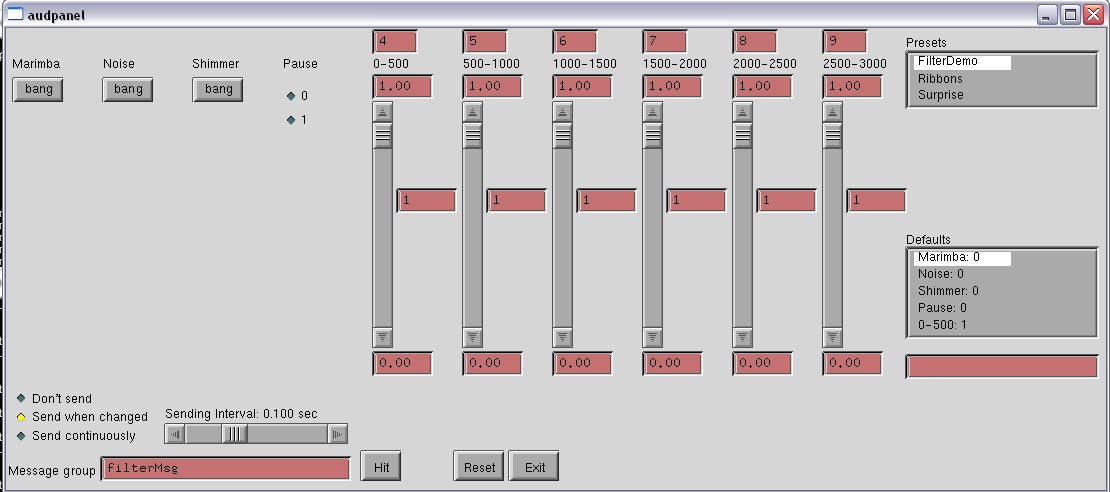

To familiarize myself with VSS, as well as to satisfy some academic curiosity about filters, I created a (very long) aud file that implements a very basic equalizer along with some basic actors. I also used a precompiled program called "audpanel" to test my aud files. Audpanel was developed so that an application developer, like myself, could separate the testing of the main part of an application from the sonification of that application. As this project was purely about sonification, it was sufficient to use audpanel to develop the aud files needed to sonify existing applications. This way, the project became centered on the aud files, which make up the bulk of the programming needed to sonify existing CUBE applications. Below is an image of the audpanel I developed to implement a graphical equalizer.

Note that the implementation of the graphical interface was not my work; I merely wrote a small amount of code that the Audpanel program parsed to create this GUI. My full .ap code for this audpanel is linked in my Code Repository.

This audpanel is fairly simple in design: you choose the type of sound that you want via the buttons on the left, and set the equalizer settings for the sound on the right. Although the audpanel design itself is simple, the aud file I developed with it can easily be incorporated into a more complex C/C++ program, merely by calling AUDUpdate(...) with the parameters I allowed the user of the audpanel to control. For my full analysis of this part of my project, see my project writeup here.

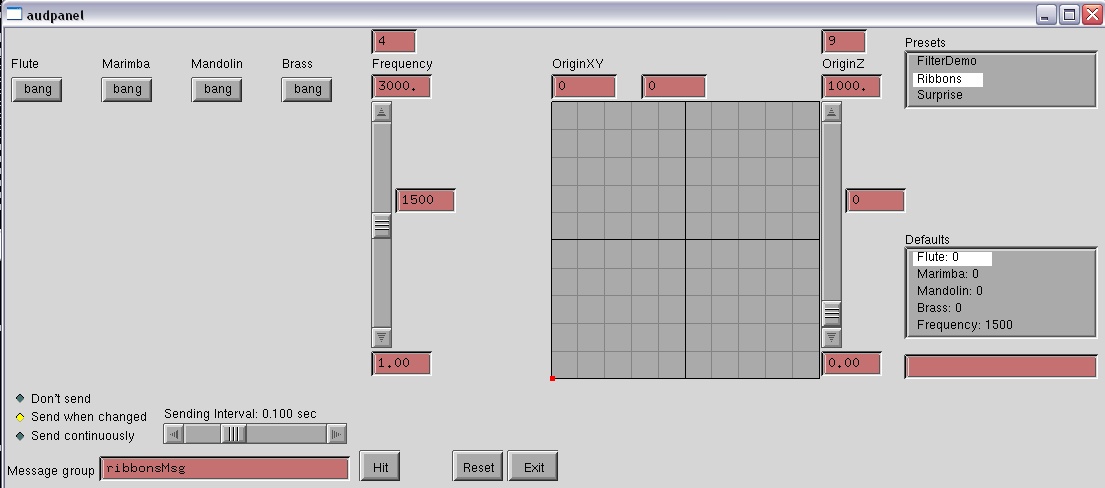

To fully test the use of VSS in the CUBE, I planned, with the help of Camille Goudeseune, to use VSS to sonify the application "Ribbons", a program in which the user waves a wand to create three-dimensional drawings, not unlike the paint programs that ship with several operating systems. To do this, I used another audpanel to develop an aud file that would create a suitable sonification of "Ribbons".

Unfortunately, as of now, although the VSS server is fully functional in the CUBE, the client side programs repeatedly fail to link, because VSS was developed at least a decade ago on SGI computers that are now obsolete. As such, I will not be able to truly get VSS working in the CUBE just yet.

UPDATE (11dec09): Camille is currently working on the problem of linking VSS with Aszgard (the development platform used in the CUBE). No news yet on whether any progress has been made.

Homepage for VSS (VSS download plus more references)

Guide to adding sound to your C/C++ application

Reference manual to VSS 3.0 (includes Actor descriptions)

Audpanel file for equalizer and ribbons simulator

Audfile for equalizer and ribbons simulator

Readme for project